Pop icon Taylor Swift became the latest target of deepfakes as AI-generated sexually explicit images of her flooded social media platform X this week. The deepfakes, which amassed millions of views, sparked an outcry, with politicians and tech CEOs condemning the development.

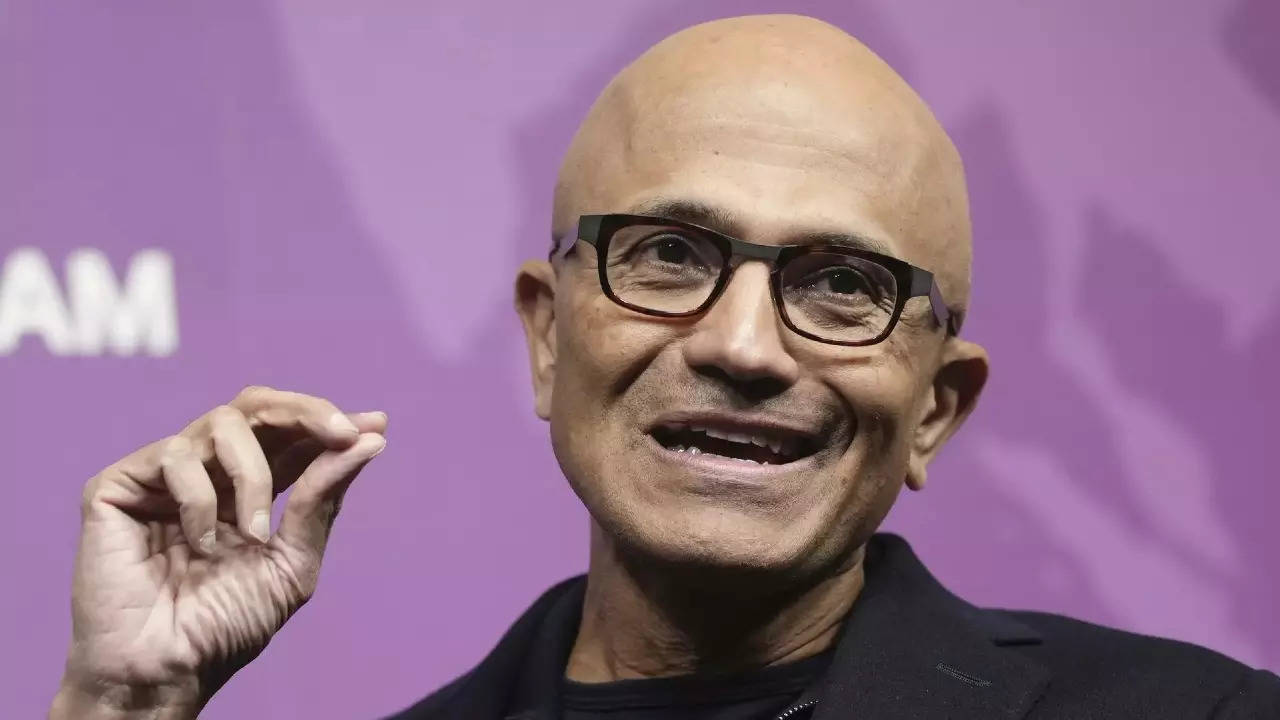

Microsoft CEO Satya Nadella was among those who called the images “alarming and terrible,” and said “I think it behooves us to move fast on this.” The case raises concerns about online safety and the ethical implications of AI technology.

Here’s what Nadella said in an interview with NBC:

I would say two things: One, is again I go back to what I think’s our responsibility, which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced. And there’s a lot to be done and a lot being done there. But it is about global, societal — you know, I’ll say, convergence on certain norms. And we can do — especially when you have law and law enforcement and tech platforms that can come together — I think we can govern a lot more than we think— we give ourselves credit for.

Nadella’s comments are significant in this case because reports suggest that the images originated on a Telegram channel known for sharing AI-generated pornography. According to a report by 404 Media, these sexually explicit AI-generated images of Taylor Swift were made with a free Microsoft text-to-image AI generator.

While AI tools refuse to create such images, several past studies have shown that these tools can be ‘fooled’ into producing them if the person using them enters tweaked prompts.

Meanwhile, fans of Taylor Swift and politicians, including the White House, expressed outrage at the AI-generated fake porn images of the pop star. One image of the US megastar was seen 47 million times on X, a report said.
