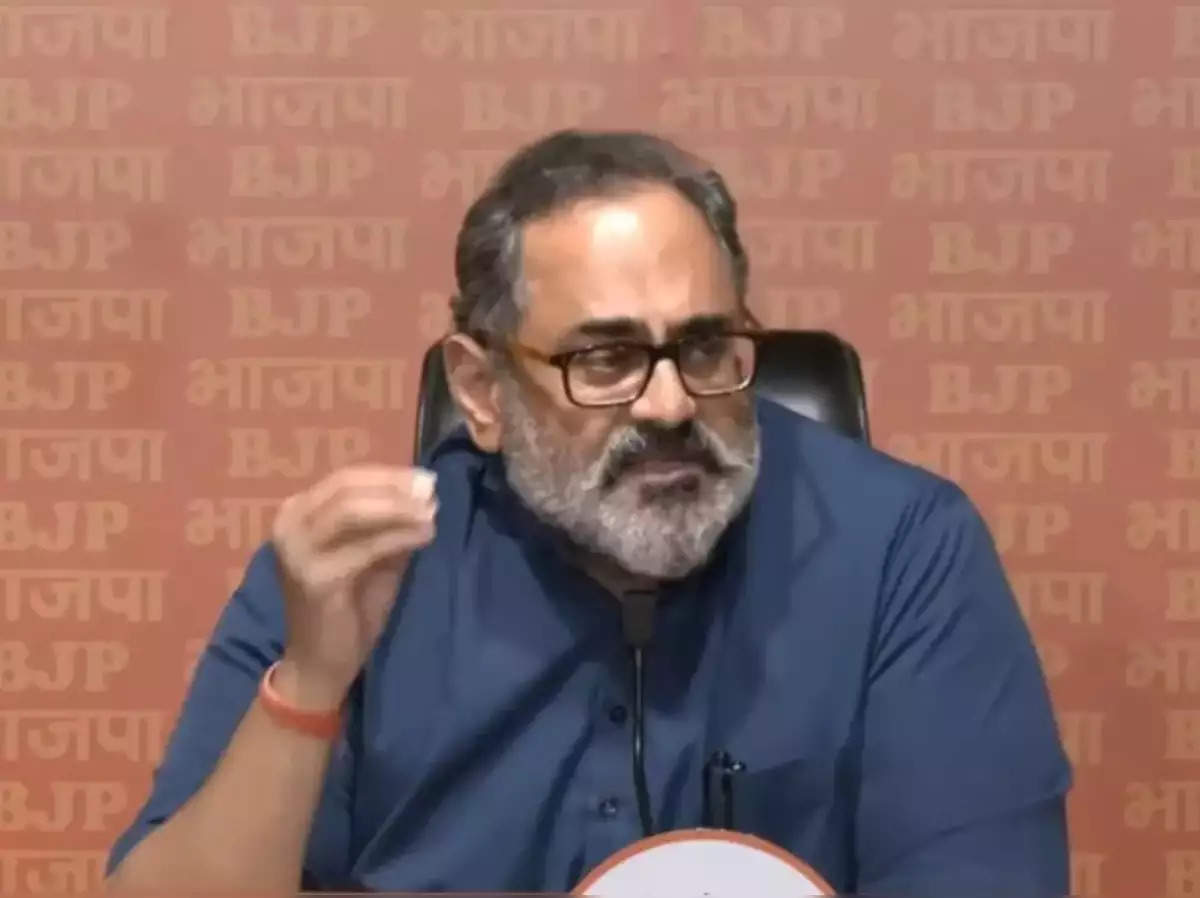

Platforms must now seek government approval for deploying untested AI models and clearly label their potential unreliability, IT minister Rajeev Chandrasekhar said days after Google’s AI platform, Gemini, generated controversial responses to queries about Prime Minister Modi.

“The episode of Google Gemini is very embarrassing, but saying that the platform was under trial and unreliable is certainly not an excuse to escape from prosecution,” the minister said.

The government considers this a violation of IT laws and emphasises transparent user consent before deploying such models.

“I advise all platforms to openly disclose to the consumers and seek consent from them before deploying any under-trial, erroneous platforms on the Indian public internet. Nobody can escape accountability by apologising later. Every platform on the Indian internet is obliged to be safe and trusted,” he added.

The advisory asks the entities to seek approval from the government before deploying under-trial or unreliable artificial intelligence (AI) models.

“Use of under-testing / unreliable Artificial Intelligence model(s) /LLM/Generative AI, software(s) or algorithm(s) and its availability to the users on Indian Internet must be done so with the explicit permission of the Government of India and be deployed only after appropriately labelling the possible and inherent fallibility or unreliability of the output generated,” it said.

This follows a December 2023 advisory addressing deepfakes and misinformation.

Government makes labelling mandatory

The government has issued an advisory requiring all tech companies developing AI models to seek permission before launching them in India. The centre has also asked social media companies to label under-trial AI models and to ensure that such models do not allow illegal content to be hosted.

“All intermediaries or platforms to ensure that use of Artificial Intelligence model(s) /LLM/Generative AI, software(s) or algorithm(s) on or through its computer resource does not permit its users to host, display, upload, modify, publish, transmit, store, update or share any unlawful content,” the advisory said.

In the advisory, issued to intermediaries/platforms on March 1, the Ministry of Electronics and Information Technology also warned of criminal action in case of non-compliance. Platforms are responsible and will be held accountable for any breaches, it added.

“Non-compliance with provisions would result in penal consequences,” the advisory said.
